Warning

This portion of the book is under construction and not ready to be read.

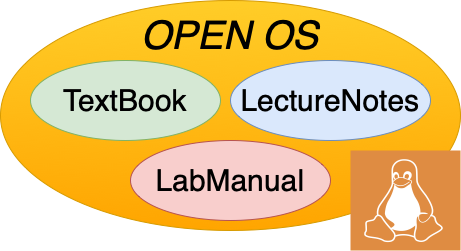

34. Introduction to Concurrency, Synchronization and Deadlock

Earlier, we saw how the operating system virtualizes the CPU through the use of threads, making it appear to the user that multiple CPUs are running at the same time. However, when the threads of a process share data or must run in a particular order, we run into problems such as race conditions, deadlocks, and security vulnerabilities. One of the key responsibilities of an operating system is synchronization: handling concurrent events in a reasonable way, and providing mechanisms for user applications to do so as well.
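To make the race condition concrete, here is a minimal sketch in C, assuming a POSIX system with pthreads. Several threads increment a shared counter; the names `run_counter`, `worker`, `NTHREADS`, and `ITERS` are hypothetical, chosen only for illustration. `counter++` is a read-modify-write sequence rather than one indivisible step, so two threads can read the same old value and one update is lost. Wrapping the increment in `pthread_mutex_lock`/`pthread_mutex_unlock` makes the result exactly `NTHREADS * ITERS`.

```c
#include <pthread.h>
#include <stdbool.h>

#define NTHREADS 4       /* hypothetical thread count for the demo */
#define ITERS    100000  /* hypothetical increments per thread     */

static long counter = 0;                 /* shared by all threads    */
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;
static bool use_lock = false;            /* set before threads start */

static void *worker(void *arg) {
    (void)arg;
    for (int i = 0; i < ITERS; i++) {
        if (use_lock)
            pthread_mutex_lock(&counter_lock);
        counter++;  /* read-modify-write: not one indivisible step */
        if (use_lock)
            pthread_mutex_unlock(&counter_lock);
    }
    return NULL;
}

/* Runs NTHREADS workers and returns the final count.
   Error handling for pthread_create/pthread_join is omitted for brevity. */
long run_counter(bool locked) {
    pthread_t tids[NTHREADS];
    counter = 0;
    use_lock = locked;
    for (int i = 0; i < NTHREADS; i++)
        pthread_create(&tids[i], NULL, worker, NULL);
    for (int i = 0; i < NTHREADS; i++)
        pthread_join(tids[i], NULL);
    return counter;
}
```

`run_counter(true)` returns 400000. `run_counter(false)` will often return less, and the value changes from run to run; strictly speaking the unlocked version is a data race, which is undefined behavior in C11, so no particular result is guaranteed.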

interprocess communication and the need to share data

system calls that enable this: shared memory regions, shmat (refer back to pipes)
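As a sketch of the shared-memory calls mentioned here, the C function below assumes a Linux or other POSIX system with System V IPC available. It creates a segment with `shmget`, attaches it with `shmat`, and forks; the child inherits the attachment, writes a value, and exits, and the parent waits with `waitpid` before reading. `shm_roundtrip` is a hypothetical name. Note that the wait is itself a crude form of synchronization: without it, the parent could read the segment before the child has written to it.

```c
#include <sys/ipc.h>
#include <sys/shm.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Child writes `value` into a System V shared memory segment;
   the parent returns what it reads back, or -1 on error. */
int shm_roundtrip(int value) {
    /* Create a new private segment big enough for one int. */
    int id = shmget(IPC_PRIVATE, sizeof(int), IPC_CREAT | 0600);
    if (id == -1)
        return -1;

    /* Attach it into this process's address space. */
    int *shared = shmat(id, NULL, 0);
    if (shared == (void *)-1) {
        shmctl(id, IPC_RMID, NULL);
        return -1;
    }
    *shared = 0;

    pid_t pid = fork();
    if (pid == -1) {
        shmdt(shared);
        shmctl(id, IPC_RMID, NULL);
        return -1;
    }
    if (pid == 0) {
        /* Child: the attachment is inherited across fork. */
        *shared = value;
        shmdt(shared);
        _exit(0);
    }

    /* Parent: wait for the child so the read happens after its write. */
    waitpid(pid, NULL, 0);
    int result = *shared;

    shmdt(shared);
    shmctl(id, IPC_RMID, NULL);  /* otherwise the segment outlives both processes */
    return result;
}
```

Unlike a pipe, nothing is copied through the kernel on each access: both processes read and write the same physical memory, which is exactly why they need synchronization.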

a short statement that we have parallel hardware with lots of cores…

this leads to threads, from the perspective that we want to work on the same data…

locking

deadlock
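A common way to avoid deadlock is to acquire locks in one global order. In the sketch below (hypothetical names `struct account`, `transfer`, and `run_opposite_transfers`), two threads move money between the same two accounts in opposite directions. If each thread simply locked `from` and then `to`, one thread could hold A's lock while waiting for B's and the other hold B's while waiting for A's, and neither could proceed. Ordering by address is an assumption made for illustration; any consistent total order breaks the circular wait. The code assumes `from != to`, since locking the same default mutex twice would itself deadlock.

```c
#include <pthread.h>
#include <stdint.h>

/* Hypothetical account type: each account carries its own lock. */
struct account {
    pthread_mutex_t lock;
    long balance;
};

/* Move `amount` between two accounts; assumes from != to. */
static void transfer(struct account *from, struct account *to, long amount) {
    /* Lock the lower-addressed account first so every thread uses the
       same order, which rules out a circular wait. */
    struct account *first  = (uintptr_t)from < (uintptr_t)to ? from : to;
    struct account *second = (first == from) ? to : from;

    pthread_mutex_lock(&first->lock);
    pthread_mutex_lock(&second->lock);
    from->balance -= amount;
    to->balance   += amount;
    pthread_mutex_unlock(&second->lock);
    pthread_mutex_unlock(&first->lock);
}

struct transfer_job {
    struct account *from, *to;
    int count;
};

static void *transfer_worker(void *arg) {
    struct transfer_job *job = arg;
    for (int i = 0; i < job->count; i++)
        transfer(job->from, job->to, 1);
    return NULL;
}

/* Two threads transfer 1 unit in opposite directions `count` times each.
   Both accounts start at 1000; final balances go to *a_out and *b_out.
   Error handling is omitted for brevity. */
void run_opposite_transfers(int count, long *a_out, long *b_out) {
    struct account a = { .balance = 1000 };
    struct account b = { .balance = 1000 };
    pthread_mutex_init(&a.lock, NULL);
    pthread_mutex_init(&b.lock, NULL);

    struct transfer_job ab = { &a, &b, count };
    struct transfer_job ba = { &b, &a, count };
    pthread_t t1, t2;
    pthread_create(&t1, NULL, transfer_worker, &ab);
    pthread_create(&t2, NULL, transfer_worker, &ba);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);

    *a_out = a.balance;
    *b_out = b.balance;
    pthread_mutex_destroy(&a.lock);
    pthread_mutex_destroy(&b.lock);
}
```

Because both directions run the same number of transfers, both balances end at 1000, and the program always terminates.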

challenges of modern hardware

NUMA, caches…

sequential consistency, processor consistency… the C++11 memory model (possibly part 4)
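C11 adopted essentially the same memory model as C++11, so to stay in one language this sketch uses C11 `<stdatomic.h>`, assuming a compiler and libc that support it. It shows the message-passing pattern: a producer writes an ordinary variable, then sets a flag with `memory_order_release`; the consumer spins on the flag with `memory_order_acquire`. The release/acquire pair guarantees that once the consumer sees the flag, it also sees the payload. With `memory_order_relaxed` on both sides that guarantee disappears (and the plain read of `payload` becomes a data race), so on weakly ordered hardware the consumer could read a stale value. The names `publish_and_read`, `producer`, `payload`, and `ready` are hypothetical.

```c
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>

static int payload;          /* ordinary, non-atomic data  */
static atomic_bool ready;    /* flag that publishes payload */

static void *producer(void *arg) {
    payload = *(const int *)arg;                               /* 1: write data */
    atomic_store_explicit(&ready, true, memory_order_release); /* 2: publish it */
    return NULL;
}

/* Start a producer thread and spin until it publishes, then read payload. */
int publish_and_read(int value) {
    pthread_t t;
    /* Reset before the thread starts; pthread_create orders this store. */
    atomic_store_explicit(&ready, false, memory_order_relaxed);
    pthread_create(&t, NULL, producer, &value);

    /* Acquire load: once it returns true, the write to payload is visible. */
    while (!atomic_load_explicit(&ready, memory_order_acquire))
        ;  /* busy-wait: fine for a demo, wasteful in real code */

    int seen = payload;
    pthread_join(t, NULL);
    return seen;
}
```

Under sequential consistency this ordering would come for free; the explicit orderings show what weaker models require the programmer to state.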

scalability, lock granularity
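Lock granularity trades simplicity for scalability: a single global lock is easy to reason about but serializes every thread. The sketch below (hypothetical names `striped_counters`, `striped_add`, `striped_total`, `striped_demo`, with an arbitrary `NBUCKETS` of 16) instead gives each bucket of a counter table its own mutex, so threads updating keys that map to different buckets do not contend.

```c
#include <pthread.h>

#define NBUCKETS 16            /* hypothetical stripe count          */
#define MAX_STRIPE_THREADS 16  /* upper bound for the demo driver    */

struct striped_counters {
    pthread_mutex_t locks[NBUCKETS];  /* one lock per bucket */
    long counts[NBUCKETS];
};

void striped_init(struct striped_counters *s) {
    for (int i = 0; i < NBUCKETS; i++) {
        pthread_mutex_init(&s->locks[i], NULL);
        s->counts[i] = 0;
    }
}

/* Only the bucket that `key` maps to is locked. */
void striped_add(struct striped_counters *s, unsigned key, long delta) {
    unsigned b = key % NBUCKETS;
    pthread_mutex_lock(&s->locks[b]);
    s->counts[b] += delta;
    pthread_mutex_unlock(&s->locks[b]);
}

/* Sums bucket by bucket; not an atomic snapshot while writers are active. */
long striped_total(struct striped_counters *s) {
    long total = 0;
    for (int i = 0; i < NBUCKETS; i++) {
        pthread_mutex_lock(&s->locks[i]);
        total += s->counts[i];
        pthread_mutex_unlock(&s->locks[i]);
    }
    return total;
}

struct stripe_job { struct striped_counters *s; unsigned key; int n; };

static void *stripe_worker(void *arg) {
    struct stripe_job *job = arg;
    for (int i = 0; i < job->n; i++)
        striped_add(job->s, job->key, 1);
    return NULL;
}

/* Each of `nthreads` threads adds 1 to its own key `n` times.
   Returns the total, or -1 if nthreads is out of range. */
long striped_demo(int nthreads, int n) {
    struct striped_counters s;
    pthread_t tids[MAX_STRIPE_THREADS];
    struct stripe_job jobs[MAX_STRIPE_THREADS];
    if (nthreads < 0 || nthreads > MAX_STRIPE_THREADS)
        return -1;

    striped_init(&s);
    for (int i = 0; i < nthreads; i++) {
        jobs[i] = (struct stripe_job){ &s, (unsigned)i, n };
        pthread_create(&tids[i], NULL, stripe_worker, &jobs[i]);
    }
    for (int i = 0; i < nthreads; i++)
        pthread_join(tids[i], NULL);

    long total = striped_total(&s);
    for (int i = 0; i < NBUCKETS; i++)
        pthread_mutex_destroy(&s.locks[i]);
    return total;
}
```

The cost of finer granularity shows up in `striped_total`: it locks buckets one at a time, so while other threads are still updating, the sum does not correspond to any single moment. A consistent snapshot would require holding all the locks at once, acquired in a fixed order to avoid deadlock.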

locking in linux